In a recent post by Larry Ferlazzo, "Yet Another Nail-In-The-Coffin of AI Writing Detectors," he offers even more evidence dispelling the myth that AI detection software works. Even if AI detectors can sometimes correctly identify whether or not a human wrote a piece of writing, they are wrong so often that they cannot be trusted.

I started teaching in 1991, when search engines were still young (pre-Google) and checking for plagiarism meant looking through encyclopedias. (Fun fact: I tried selling encyclopedias once when I was 19 years old and only managed to sell one set before I gave up, thinking that people should NOT be spending their money on these anymore! LOL.) Encyclopedias were much easier to plagiarize from than library books. If a student went to the trouble of finding books in the library and reading through them for information, that would have been pretty cool!

Then came Google, and educators saw their worlds turned upside down. We had to shift from being the content experts, with our textbooks as the major source of information, to acknowledging that more information than we could ever track was at our students' fingertips. Ever since then, we've prioritized the development of what are called "soft skills," such as empathy and grit, to name a couple. The R's (Reading, wRiting, and aRithmetic) gave way to the C's (Collaboration, Creativity, Cooperation, and Critical Thinking). Problem-Solving and Project-Based Learning (PBL) started to gain traction.

Checking for cheating or plagiarism was proving quite difficult if you had more students than you could conceivably keep track of. If you taught just one class, as in elementary school, you could get to know the writing style of each of your students quite well. With 150 or so students, teachers could still tell when students were not writing their papers themselves, but some students could argue that they had, even against our best judgment.

Then came plagiarism checkers, and they got pretty good. Checking text against content on the Internet was something software could do, so teachers could check student work quickly and tell their students what percentage of it was plagiarized. Now that AI generates completely original content, plagiarism checkers are of little use, so software developers and plagiarism-checking services have had to design AI detectors. In theory, that is a good idea, and one that would ease many teachers' concerns about whether students used ChatGPT or other AI chatbots to write their papers. In practice, though, the detectors aren't working all that well. And as quickly as AI detectors improve, ChatGPT and similar tools improve too, so we cannot rely on them.

In his post, Larry shares six things teachers can do instead of relying on AI detectors, copied here directly from his post with the links he includes:

Yet Another Nail-In-The-Coffin of AI Writing Detectors by Larry Ferlazzo.

- Provide students with specific guidance for using AI.

- Teach them about the benefits and dangers of AI.

- Modify lessons so that it is more difficult for AI to be useful when completing assignments.

- Develop lessons where students can use AI appropriately.

- Invite students to create class guidelines on AI use.

- Have a handwritten formative assessment at the beginning of the year and every quarter so you can have an accurate sense of each student's writing skill and style.

I have shared here how I start by co-creating a classroom AI policy with my students to give them guidance on what is considered cheating and what is okay. I don't do that because cheating is so horrible; what we consider cheating in schools is standard operating procedure in the "real world." So it's NOT about cheating, it's about learning. When we have an encyclopedia, Google, or an AI do all the work or writing, we are not learning the content ourselves. And even if we are brilliant at taking the words from an encyclopedia, website, or AI generator and creating beautiful-looking essays, reports, posters, display boards, blogs, websites, presentations, or videos, we still need to learn enough content to understand whatever problems we will need to solve in our everyday lives, let alone be competent in our chosen careers!

So I don’t even call it cheating, I tell students what they should not do with AI or Google research or searching so they will still LEARN. Copying and pasting something that you don’t read or just skim over doesn’t provide any learning. I have my students DOING a lot so when I ask them to consume some information to learn some basic Science or vocabulary, I don’t need them cutting corners. Larry mentions teaching the dangers of AI and while that includes inherent biases and the possibility of hallucinations, there’s also danger in having the AI do the heavy lifting and the human doing little to nothing but presenting the outputs without understanding much of what the AI has generated.

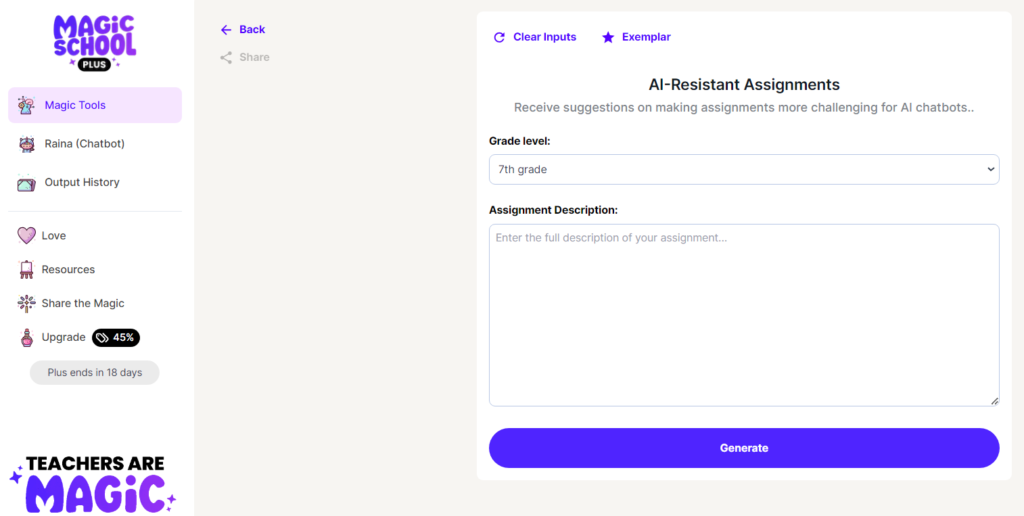

As for modifying lessons so that AI is less useful for completing them, that suggestion brings me back to the early days of Google, when we actually had workshops called "Writing UnGoogleable Questions"! Yeah, that was a thing! We went through this before, and we're going through it again. I am hopeful that all these changes will make education more relevant and interesting to our students so they don't have to do all their real learning outside of our classrooms. And speaking of AI-resistant assignments, MagicSchool AI has a tool for that!

Larry also shares that he has his students complete handwritten formative assessments regularly, which is something many teachers already do! I mean, we are just talking about good teaching here, which includes checking on students and teams as they learn and work through their assignments, activities, labs, and projects. If we aren't lecturing all the time, then we can give mini-lessons (or flip our classrooms) so that students are actively working in class while we walk around and check in with them. When we monitor their process, we don't have to rely only on final assessments to determine what our students know. And if they are taking writing assignments home, then plan on them "cheating," or getting some help from parents, tutors, Google, ChatGPT, or whatever else they can get their hands on. That type of behavior is only exacerbated when we punish and reward kids with high-stakes grades based on their homework.

There are so many things that need fixing in education, and every time something like this happens (Google, the pandemic, ChatGPT), I hope it will be the catalyst that forces positive, student-centered changes in education on a system-wide level! I know, wishful thinking. But I still hold out hope. At the very least, we, the classroom teachers, can make the changes necessary to motivate and engage our students in relevant learning that will prepare them for their futures!